Pydantic AI + Vercel AI SDK: My Favorite Tech Stack for Building AI Agents


If you just want the example code and don't want to read, the link to the GitHub repo is here: Pydantic AI + FastAPI + Vercel AI SDK + React

The Honest Truth: I love Pydantic AI, but it's only available in Python. I love the Vercel AI SDK, but it's only available in TypeScript. This forced me to bridge two worlds that don't naturally talk to each other.

After building several AI agents and experimenting with different frameworks, I've settled on a stack that combines the best of both ecosystems: Pydantic AI + FastAPI + Vercel AI SDK + React TypeScript.

Why Pydantic AI? What is it?

Anthropic's recent "Building Effective Agents" blog post offers sage advice about starting simple and understanding what's under the hood. I completely agree, which is why I gravitated toward Pydantic AI after trying other frameworks:

CrewAI pushed the multi-agent "roles" narrative too hard for my taste. It also didn't work with the type of MCP server I gravitate toward: stateless, remote MCP servers that use the Streamable HTTP transport.

LangGraph had a terrible developer experience. The documentation was lacking, it required registering for an API key just to run locally, and the vibes were just off. I never loved LangChain, and LangGraph felt very LangChain-esque.

Pydantic AI was a breath of fresh air. Look at this Hello World example and how simple it is:

```python
from pydantic_ai import Agent

agent = Agent(
    'google-gla:gemini-1.5-flash',
    system_prompt='Be concise, reply with one sentence.',
)

result = agent.run_sync('Where does "hello world" come from?')
print(result.output)
```

That's IT. And adding tools, dynamic system prompts, and structured responses using Pydantic is incredibly easy.

Why This Stack? The Trade-offs

What I Had to Hack Together:

- Streaming compatibility between Pydantic AI and Vercel AI SDK

- Tool call handling across the language boundary

- Custom stream conversion to make everything work seamlessly
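To make the "custom stream conversion" and "tool call handling" items concrete, here is a minimal sketch of the translation layer. It is written under stated assumptions, not copied from the repo. It assumes the agent's output has already been reduced to three hypothetical event types (TextDelta, ToolCall, ToolResult); in practice you would map Pydantic AI's own streaming events onto them. It then emits Server-Sent Events frames in what I understand to be the AI SDK 5 UI message stream format (start, text-start/text-delta/text-end, tool-input-available, tool-output-available, finish, then [DONE]). Check the field names against the AI SDK stream protocol docs before relying on them.

```python
from __future__ import annotations

import json
import uuid
from dataclasses import dataclass
from typing import Any, Iterable, Iterator, Union


# Hypothetical, simplified stand-ins for the events a Pydantic AI run produces.
@dataclass
class TextDelta:
    text: str


@dataclass
class ToolCall:
    tool_call_id: str
    tool_name: str
    args: dict[str, Any]


@dataclass
class ToolResult:
    tool_call_id: str
    output: Any


Event = Union[TextDelta, ToolCall, ToolResult]


def sse(payload: dict[str, Any] | str) -> str:
    """Encode one Server-Sent Events frame."""
    data = payload if isinstance(payload, str) else json.dumps(payload)
    return f"data: {data}\n\n"


def to_ui_message_stream(events: Iterable[Event]) -> Iterator[str]:
    """Translate agent events into (assumed) AI SDK 5 UI message stream frames."""
    yield sse({"type": "start", "messageId": f"msg_{uuid.uuid4().hex}"})
    text_id: str | None = None  # id of the currently open text part, if any
    for event in events:
        if isinstance(event, TextDelta):
            if text_id is None:
                text_id = f"text_{uuid.uuid4().hex}"
                yield sse({"type": "text-start", "id": text_id})
            yield sse({"type": "text-delta", "id": text_id, "delta": event.text})
            continue
        # Any non-text event closes the open text part first.
        if text_id is not None:
            yield sse({"type": "text-end", "id": text_id})
            text_id = None
        # The same toolCallId on input and output lets the UI pair them up.
        if isinstance(event, ToolCall):
            yield sse({
                "type": "tool-input-available",
                "toolCallId": event.tool_call_id,
                "toolName": event.tool_name,
                "input": event.args,
            })
        elif isinstance(event, ToolResult):
            yield sse({
                "type": "tool-output-available",
                "toolCallId": event.tool_call_id,
                "output": event.output,
            })
    if text_id is not None:
        yield sse({"type": "text-end", "id": text_id})
    yield sse({"type": "finish"})
    yield sse("[DONE]")
```

In FastAPI you would wrap the generator in a StreamingResponse with media_type="text/event-stream"; my understanding is that AI SDK 5 also expects an x-vercel-ai-ui-message-stream: v1 response header. The full protocol has more frame types (step boundaries, streamed tool input, errors) that this sketch leaves out.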

The Good Parts:

- Both ecosystems have excellent hot reloading (FastAPI + Vite)

- uv and Bun are both fantastic package managers

- Amazing developer experience on both sides

The Trade-offs:

- No shared type safety across the stack

- Having to maintain code in two languages

- Python and TypeScript don't naturally communicate well

- More complexity than a single-language solution

Why This Post Exists: I built this example repository to show how to make these two amazing but separate ecosystems work together effectively, despite their incompatibilities.

The Tech Stack

Backend (Python)

- Pydantic AI - Type-safe AI agent framework with structured outputs

- FastAPI - Modern, fast web framework for building APIs

- uv - Blazing fast Python package manager

Frontend (TypeScript)

- React 19 - Modern UI library with hooks

- Vite - Lightning fast build tool

- Vercel AI SDK - Streaming AI interactions

- Bun - Fast package manager and runtime

What makes Pydantic AI special is how simple it is. Unlike LangChain or other frameworks that feel overly complex, Pydantic AI gets out of your way and lets you build agents that feel like normal Python code.

Adding MCP Servers is Incredibly Simple

One of my favorite aspects of Pydantic AI is how easily it integrates with stateless or stateful remote MCP servers over the Streamable HTTP transport. Here's all it takes:

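```python
import os

from pydantic_ai import Agent
from pydantic_ai.mcp import MCPServerStreamableHTTP

# Assumed names and values: the repo loads these from its own settings.
mcp_api_key = os.environ["MCP_API_KEY"]
model = "groq:qwen/qwen3-32b"
system_prompt_content = "You are a helpful scheduling assistant."

# Set up MCP server connection
server = MCPServerStreamableHTTP(
    url="https://outlook-remote-mcp-server.onrender.com/mcp",
    headers={"X-API-KEY": mcp_api_key},
)

agent = Agent(model, toolsets=[server], system_prompt=system_prompt_content)
```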

That's it. You can instantly expand your agent's capabilities by connecting to any MCP server. These are the types of MCP servers I wrote about in my latest eBook, "Build Your First MCP Server", where I showed how to wrap existing APIs using TypeScript on Bun with minimal dependencies.

Model Provider Choice

I use Groq Cloud with the Qwen3 32B model in my example repository, but you can use any provider that Pydantic AI supports. Check out the full list at https://ai.pydantic.dev/api/providers/.

Why Groq + Qwen3 32B? It's cheap and decent at native tool calling.

Using a Different Provider? Just update your environment variables to match the provider's API key name, modify the pydantic-settings configuration, and update the model name.
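As a hedged sketch of that provider switch, the snippet below uses a plain dataclass as a stdlib stand-in for the repo's pydantic-settings class; it is not the author's code. The MODEL_PROVIDER and MODEL_NAME variables, the provider-to-key table, and the qwen/qwen3-32b default are assumptions to check against your provider. The only Pydantic AI convention it relies on is the "provider:model-name" string format from the Hello World example.

```python
from __future__ import annotations

import os
from dataclasses import dataclass
from typing import Mapping

# Hypothetical table: Pydantic AI provider prefix -> env var holding its API key.
# These follow each provider's usual naming; verify them for your setup.
PROVIDER_KEY_VARS = {
    "groq": "GROQ_API_KEY",
    "openai": "OPENAI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
    "google-gla": "GEMINI_API_KEY",
}


@dataclass(frozen=True)
class ModelSettings:
    provider: str
    model_name: str
    api_key: str

    @property
    def model_string(self) -> str:
        # "provider:model-name", the same shape as "google-gla:gemini-1.5-flash".
        return f"{self.provider}:{self.model_name}"


def load_model_settings(env: Mapping[str, str] = os.environ) -> ModelSettings:
    """Read provider, model name, and API key from environment-style variables."""
    provider = env.get("MODEL_PROVIDER", "groq")  # hypothetical variable name
    model_name = env.get("MODEL_NAME", "qwen/qwen3-32b")  # assumed Groq model id
    key_var = PROVIDER_KEY_VARS.get(provider)
    if key_var is None:
        raise ValueError(f"unsupported provider: {provider!r}")
    api_key = env.get(key_var)
    if not api_key:
        raise RuntimeError(f"missing {key_var} for provider {provider!r}")
    return ModelSettings(provider, model_name, api_key)
```

Switching providers then means changing two variables and supplying the matching key; the resulting model_string is what you would hand to Agent(...).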

Try It Yourself

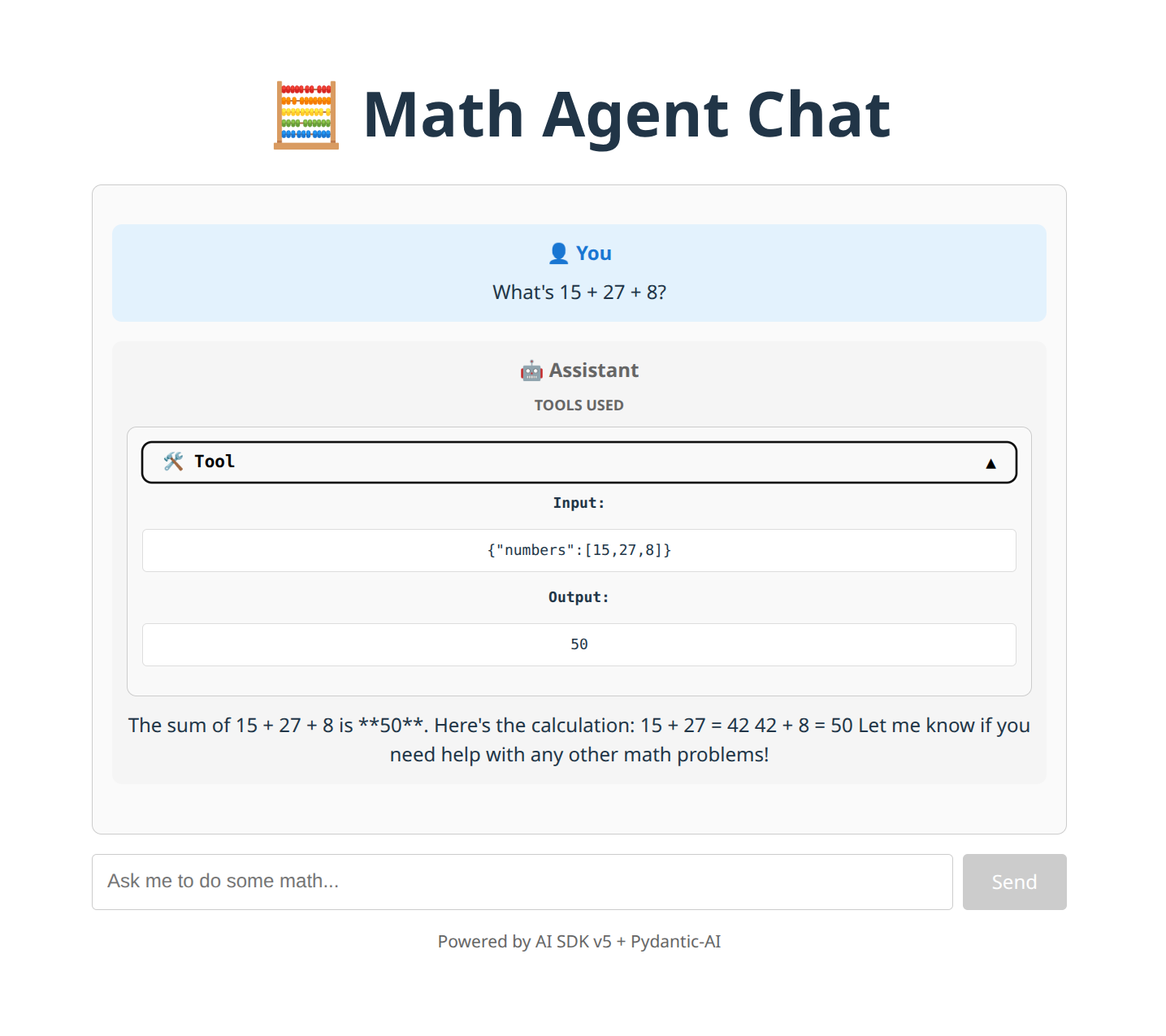

Want to see how this all works together? I've created a complete example repository that demonstrates this stack: Pydantic AI + FastAPI + Vercel AI SDK + React

The repository includes:

- Minimal FastAPI + Pydantic AI backend with streaming compatibility

- React TypeScript frontend using Vercel AI SDK

- Complete setup with uv and Bun for the best developer experience

- Environment configuration and development scripts

This example is intentionally barebones to show the core logic of connecting a tool-calling Pydantic AI agent to the Vercel AI SDK Version 5. Our internal agent platform at Umbrage has a much more robust, ChatGPT-like UI built with shadcn components and inspired by Vercel's Next.js AI starter kit, but this example strips away production complexity to focus on the essential integration between these ecosystems.
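One small piece of that integration is reading what useChat sends to the Python backend. Assuming the AI SDK 5 request shape, where each message carries a role and a list of typed parts, a minimal helper (the name latest_user_text is hypothetical) for pulling out the newest user turn could look like this:

```python
from __future__ import annotations

from typing import Any


def latest_user_text(body: dict[str, Any]) -> str:
    """Return the text of the newest user message in a useChat request body.

    Assumes the AI SDK 5 shape: body["messages"] is a list of UI messages,
    each with a "role" and a list of typed "parts"; text lives in parts
    shaped like {"type": "text", "text": "..."}.
    """
    for message in reversed(body.get("messages", [])):
        if message.get("role") != "user":
            continue
        # Keep only text parts; files, tool parts, etc. are ignored here.
        texts = [
            part.get("text", "")
            for part in message.get("parts", [])
            if part.get("type") == "text"
        ]
        return "\n".join(texts)
    raise ValueError("request body contains no user message")
```

This forwards only the latest turn to the agent; a fuller bridge would also convert earlier messages into Pydantic AI's message history.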

Real-World Example: Our Internal Chat Agents Platform

This stack actually powers the internal Chat Agents platform we built at Umbrage. One of our most useful agents is our Scheduling Agent, built with Pydantic AI, that connects to an Outlook MCP Server to:

- Search employee calendars for availability

- Find meeting slots that work across multiple attendees

- Handle timezone conversions automatically

- Schedule meetings directly in Outlook

- Offer availability windows to external clients
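The post doesn't show how the Outlook MCP server finds shared availability, so this is only an illustration of one common approach, not Umbrage's implementation: pool every attendee's busy blocks, sort them, and walk the gaps inside a search window. The function, the helper at(), and the data shapes are hypothetical. Keeping every datetime timezone-aware in UTC and converting with astimezone() only for display is one simple way to keep timezone handling out of the scheduling logic.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone

Interval = tuple[datetime, datetime]  # (start, end), timezone-aware


def common_free_slots(
    busy_by_attendee: dict[str, list[Interval]],
    window: Interval,
    duration: timedelta,
) -> list[Interval]:
    """Return gaps inside `window`, at least `duration` long, when nobody is busy."""
    # A moment is free only if no attendee is busy, so pool every busy block.
    busy = sorted(block for blocks in busy_by_attendee.values() for block in blocks)
    free: list[Interval] = []
    cursor = window[0]  # earliest moment not yet known to be busy
    for start, end in busy:
        if start >= window[1]:
            break  # remaining blocks begin after the window closes
        if start - cursor >= duration:
            free.append((cursor, start))
        cursor = max(cursor, end)
    if window[1] - cursor >= duration:
        free.append((cursor, window[1]))
    return free


# Example: two attendees, 09:00-17:00 UTC search window, one-hour meeting.
def at(hour: int, minute: int = 0) -> datetime:
    return datetime(2025, 1, 6, hour, minute, tzinfo=timezone.utc)


busy_blocks = {
    "alice": [(at(9), at(10)), (at(13), at(14))],
    "bob": [(at(9, 30), at(11)), (at(15), at(15, 30))],
}
slots = common_free_slots(busy_blocks, (at(9), at(17)), timedelta(hours=1))

# Convert for display; a fixed UTC-6 offset here, zoneinfo for DST-aware zones.
central = timezone(timedelta(hours=-6))
for start, end in slots:
    print(start.astimezone(central).strftime("%H:%M"), "-", end.astimezone(central).strftime("%H:%M"))
```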

The agent handles complex scheduling logic while employees interact with it through a clean React interface powered by Vercel AI SDK. It's incredibly satisfying to see team members naturally conversing with the agent to coordinate meetings instead of playing email tag.

Why I Keep Coming Back to This Stack

I love Pydantic AI, but it's only in Python. I love the Vercel AI SDK, but it's only in TypeScript. So I forced them to work together. Despite the complexity of bridging two languages that don't naturally communicate, I keep returning to this stack because it just works: a reasonable learning curve, excellent performance, and a top-notch developer experience.

Want to Chat About AI Engineering?

I hold monthly office hours to discuss your AI product, MCP servers, web dev, systematically improving your app with evals, or whatever strikes your fancy. The time slots are at odd hours (weekends, or before and after my day job) because I offer this as a free community service. I may create anonymized content from our conversations, as they often make interesting blog posts for others to learn from.
